Many education stakeholders and social commentators dislike the NAPLAN writing test. They think it (and the whole suite of annual tests) should be scrapped. NAPLAN undeniably influences classroom practices in a large number of Australian schools, and it’s also raised stress levels for at least some groups of students and teachers (Hardy & Lewis, 2018; Gannon, 2019; Ryan et al., 2021). These are valid concerns.

But as Australia’s only large-scale standardised assessment of writing, the test has the potential to provide unique and useful insight into the writing development, strengths, and weaknesses of Australia’s primary and secondary school populations (here’s an example). Added to this, the political value of NAPLAN and the immense time, energy, and money that have been poured into the tests since 2008 make it unlikely that the tests will be scrapped anytime soon.

Instead of outright scrapping the tests, or keeping them exactly as they are (warts and all), a third option is to make sure the tests are designed and implemented as well as possible to minimise concerns raised since their introduction in 2008. I’ve given the design of the NAPLAN writing test a great deal of thought over the past decade; I’ve even written a PhD about it (sad but true). In this post, I offer 3 simple fixes ACARA can make to improve the writing test while simultaneously addressing concerns expressed by critics.

1. Fix how the NAPLAN writing test assesses different genres

What’s the problem? At present, the NAPLAN writing test requires students to compose either a narrative or a persuasive text each year, giving them 40 minutes to do so.

Why is this a problem? The singular focus on narrative or persuasive writing is potentially problematic for a test designed to provide valid and reliable comparisons of results over time. Those who have taught narrative and persuasive writing in classrooms will know these genres often require very different linguistic and structural choices to achieve different social purposes. It’s OK to compare them for some criteria, like spelling, but less so for genre-specific criteria. ACARA know this too because the marking criteria and guide for the narrative version of the test (ACARA, 2010) are not the same as those for the persuasive writing version (ACARA, 2013). Even though the marking criteria for the two versions are not identical, the results are compared as though all students completed the same writing task each year. There is a risk that randomly shifting between these distinct genres leads us to compare apples and oranges.

Also related to genre is the omission of informative texts (e.g., procedures, reports, explanations) in NAPLAN testing. Approaches to writing instruction like genre-based pedagogy, The Writing Revolution, and SRSD emphasise the importance of writing to inform. This is warranted by the fact that personal, professional, and social success in the adult world relies on being able to clearly inform and explain things to others. It’s not ideal that the significant time spent developing students’ informative writing skills across the school years is not currently assessed as part of NAPLAN.

What’s the solution? A better approach would be to replicate how the National Assessment of Educational Progress (NAEP) in the US deals with genres.

How would this improve NAPLAN? In the NAEP, students write two short texts per test instead of one, with these texts potentially requiring students to persuade (i.e., persuasive), explain (i.e., informative), or convey real or imagined experience (i.e., narrative). The NAEP is administered in Years 4, 8, and 12. Matching the typical development of genre knowledge (Christie & Derewianka, 2008), the Year 4 students are most likely to be asked to write narrative and informative texts, while those in Years 8 and 12 are most likely to write informative and persuasive texts. But students in all tested year levels can still be asked to write to persuade, explain, or convey experience, so knowledge about all the genres is developed in classrooms.

Why couldn’t we do something similar with NAPLAN? Including informative texts in our writing test would incentivise the teaching of a fuller suite of genres in the lead up to NAPLAN each year. Not including informative texts in NAPLAN is a little baffling since a large proportion of student writing in classrooms is informative.

2. Fix the NAPLAN writing prompt design

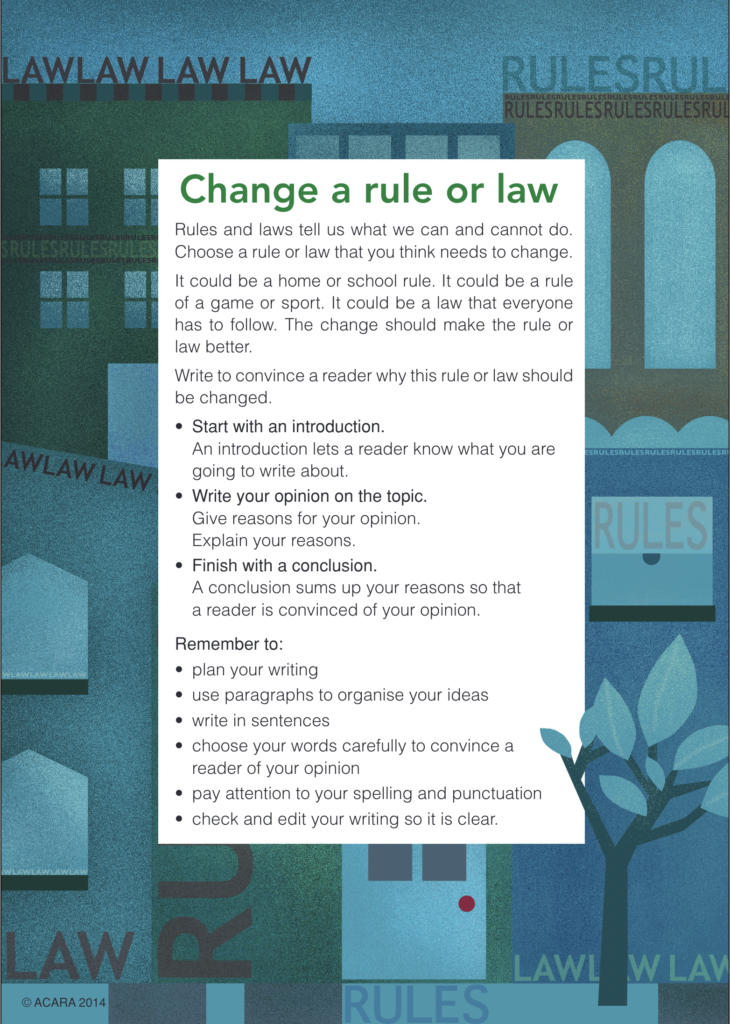

What’s the problem? At the start of every NAPLAN writing test, students receive a basic prompt that provides general information about the topic. Here’s an example from the 2014 test, which required a persuasive response:

As you can see, some useful information is provided about how children can structure their texts (e.g., Start with an introduction) and the sorts of writing choices they might like to make (e.g., choose your words carefully to convince a reader of your opinion). But how useful is this to a student who doesn’t have a lot of background knowledge about laws and rules?

My younger sister was in Year 5 in 2014 and she completed this writing test. She had previously been on two international school trips, and drew on these (and other) experiences to write an argument about raising Australia’s legal drinking age to 21, as it is in the US, and the many ways this would positively impact our society. Perhaps unsurprisingly, my sister achieved at the Year 9 standard for this persuasive text.

Why is this a problem? Compare my sister’s experience with a child from a lower socioeconomic area who had never been out of their local area, let alone Australia. It’s more challenging to suggest how rules or laws in our context should change if you don’t know about how these rules or laws differ in other places. The information provided in the prompt is far less useful if the child does not have adequate background knowledge about the actual topic.

Keeping the topic/prompt secret until the test is meant to make the test fairer for all students, yet differences in children’s life experiences already make a prompt like this one work better for some students than others. As an aside, in 2014 so many primary school students couldn’t answer this prompt that ACARA decided to write separate primary and secondary school prompts from 2015. This changed the test conditions in a considerable way, which might make it harder to reliably compare pre- and post-2014 NAPLAN writing tests, but I digress.

What’s the solution? A fairer approach, particularly for a prompt requiring a persuasive writing response, would be to provide students with select information and evidence for both sides of an issue and give them time to read through these resources. The students could then integrate evidence and expert opinion from their chosen side into their arguments (this is a fascinating process known as discourse synthesis, which I’d like to write about another time). Students could still freely argue whatever they liked about the issue at stake, but this would mean Johnny, who never went out of his local area, would at least have some information on which to base his ideas. Plus, we could potentially make the whole experience more efficient by making these supporting materials/evidence the same as those used to test students’ reading skills in the NAPLAN reading test.

How would this improve NAPLAN? Supporting information for the persuasive writing test (and the informative writing test if we can add that family of genres) would not need to be long: even just a paragraph of evidence on both sides would offer plenty for students to synthesise into their texts. We know that the test conditions and criteria influence what’s taught in classrooms, so there’s an opportunity to promote writing practices that will set students up for success in upper secondary and (for those interested) higher education contexts.

At the moment, students rarely include any evidence in their NAPLAN writing, even high-scoring students. Introducing some supporting information might help our students to get away from forming arguments based on their gut reactions (the kinds of arguments we encounter on social media).

3. Fix how the writing test positions students to address audiences

What’s the problem? Since 2008, NAPLAN writing prompts have had nothing to say about audience. Nothing in the prompt wording positions students to consider or articulate exactly who their audience is. Despite this, students’ capacity to orient, engage, and affect (for narrative) or persuade (for persuasive) the audience is one of the marking criteria. Put another way, we currently assess students’ ability to address the needs of an audience without the marker (or perhaps the student) explicitly knowing who that audience is.

Why is this a problem? The lack of a specified audience leads many students to just start writing their narratives or persuasive texts without a clear sense of who will (hypothetically speaking) read their work. This isn’t ideal because the writing choices that make texts entertaining or persuasive are dependent on the audience. This has been acknowledged as a key aspect of writing since at least Aristotle way back in Ancient Greece.

Imagine you have to write a narrative on the topic of climate change. Knowing who you are writing for will influence how you write the text. Is the audience younger or older? Are they male or female? Do they like action, romance, mystery, drama, sports-related stories, funny stories, sad stories, or some other kind of story? What if they have a wide and deep vocabulary or a narrow and shallow vocabulary? There are many other factors you could list here, and all of these would point to the fact that the linguistic and structural choices we make when writing a given genre are influenced by the audience. The current design of NAPLAN writing prompts offers no guidance on what to do with the audience.

Others have noticed that this is a problem. In a recent report about student performance on the NAPLAN writing test, the Australian Education Research Organisation (AERO) (2022) described the Audience criterion as one of five that should be prioritised in classroom writing instruction. They argued: “To be able to write to a specific audience needs explicit teaching through modelling, and an understanding of what type of language is most appropriate for the audience” (p. 70). How can the marker know if a student’s writing choices are appropriate if an audience isn’t defined?

What’s the solution? A simple fix would be to give the students information about the audience they’re entertaining, persuading, and/or informing. This is, again, how the NAEP in the US handles things, requiring that the “writing task specify or clearly imply an audience” (National Assessment Governing Board, 2010, p. vi). Audiences for the NAEP will be specified by the context of the writing task, age- and grade-appropriate, familiar to students, and consistent with the purpose identified in the writing task (e.g., to entertain) (see here for more). Another fix would be to ask students to select their own audience and record this somewhere above their response to the prompt.

How would this improve NAPLAN? Having more clarity around the intended audience of a written piece would position students to tailor their writing to suit specific reader needs. This would allow markers to make more accurate judgements about a child’s ability to orient the audience. If this isn’t fixed, markers will continue having to guess at who the student was intending to entertain or persuade.

Righting the writing test

Would these changes make the NAPLAN writing test 100% perfect? Well, no. There would still be questions about the weighting of certain criteria, the benefit/cost ratio of publicly available school results through MySchool, and other perceived issues (if anyone out there finds this interesting, I’d like to write about 3 more possible fixes in a future post). But the simple fixes outlined here would address several concerns that have plagued the writing test since 2008. This would influence the teaching of writing in positive ways and make for a more reliable and meaningful national test. The NAPLAN writing test isn’t going anywhere, so let’s act on what we’ve learnt from over a decade of testing (and from writing tests in other countries) to make it the best writing test it can be.

Seems pretty persuasive to me.

References

Australian Curriculum, Assessment & Reporting Authority. (2010). National Assessment Program – Literacy and Numeracy – Writing: Narrative marking guide. https://www.nap.edu.au/_resources/2010_Marking_Guide.pdf

Australian Curriculum, Assessment & Reporting Authority. (2013). NAPLAN persuasive writing marking guide. https://www.nap.edu.au/resources/Amended_2013_Persuasive_Writing_Marking_Guide-With_cover.pdf

Australian Education Research Organisation. (2022). Writing development: What does a decade of NAPLAN data reveal? https://www.edresearch.edu.au/resources/writing-development-what-does-decade-naplan-data-reveal/writing-development-what-does-decade-naplan-data-reveal-full-summary

Christie, F., & Derewianka, B. (2008). School discourse. Continuum Publishing Group.

Gannon, S. (2019). Teaching writing in the NAPLAN era: The experiences of secondary English teachers. English in Australia, 54(2), 43-56.

Hardy, I., & Lewis, S. (2018). Visibility, invisibility, and visualisation: The danger of school performance data. Pedagogy, Culture & Society, 26(2), 233-248. https://www.doi.org/10.1080/14681366.2017.1380073

Ryan, M., Khosronejad, M., Barton, G., Kervin, L., & Myhill, D. (2021). A reflexive approach to teaching writing: Enablements and constraints in primary school classrooms. Written Communication. https://doi.org/10.1177/07410883211005558

Thank you for another interesting read! In particular, your points around background knowledge and the importance of levelling the playing field here.

I would be interested in a future post about further fixes. Hopefully ACARA is equally interested in the persuasive points you have made.

Hi Kristy. Thanks for your interest and I hope you’re well. It would indeed be great to see ACARA make these changes. In truth, ACARA ‘have’ made several large changes to the writing test over time that have altered the test conditions (e.g., deciding to keep the genre of the test secret until the test begins each year, introducing different prompts for primary and secondary students, moving writing from paper-based to online), so there’s no reason to think it can’t be further improved in the years ahead. It’s actually pretty unique to have a whole-of-population writing test like this one (other countries usually just test samples of students), so it really does have the potential to reveal a lot about writing development and where we should be putting our efforts. If you’re interested (and haven’t already seen it), I’d recommend reading through AERO’s recent report on NAPLAN writing, which is the first research I’ve seen that explains how students have performed for each NAPLAN writing criterion. Usually, we hear average scores for students or groups of students that lump together all the criteria, so it’s fascinating to see which aspects of writing seem to be causing most issues across the years of testing (here’s the link: https://bit.ly/3F9t5Up). Thanks again and have a great day!